Artificial Intelligence is having a moment. It’s been thinking about it for a while. In the past decade alone, AI has learned to hear (Alexa), talk (Google Translate), see (facial recognition) and move (driverless cars).

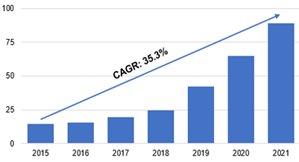

What’s abundantly clear is that the AI market has grown significantly, at a 35% CAGR since 2015.

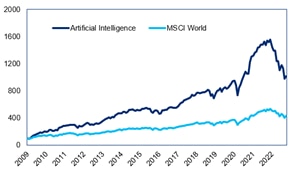

This has made AI one of the best-performing of the 90 themes Citi Research tracks in the Global Theme Machine, significantly outperforming the MSCI World Index over the last decade (fig. 2).

Historical AI revenue ($bns). Source: Average of IDC, FBI, GVR estimates

AI Cumulative Returns. Source: Citi Global Insights. © 2022 Citigroup Inc. No redistribution without Citigroup’s written permission.

CGI talked to three leading experts on AI: Professor Lukasz Szpruch, professor of mathematics at the University of Edinburgh and director of the finance and economics programme at the Alan Turing Institute; Professor Danilo Mandic, professor of signal processing in the Department of Electrical and Electronic Engineering at Imperial College; and Prag Sharma, global head of artificial intelligence at Citi’s Artificial Intelligence Centre of Excellence. The full report features wide-ranging interviews with all three, offering valuable insights into the areas of AI innovation that most excite them, along with more detail on their particular areas of focus.

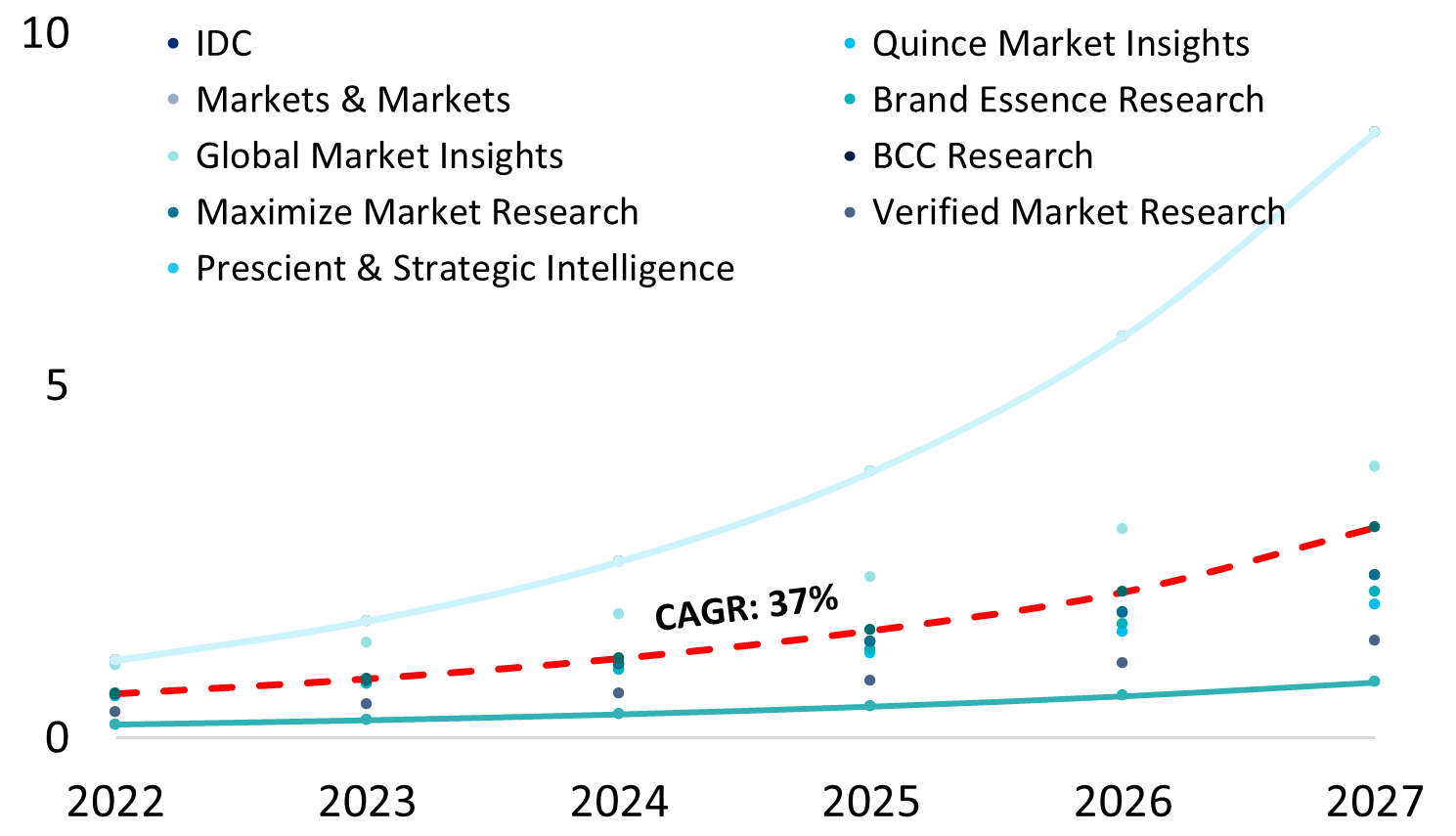

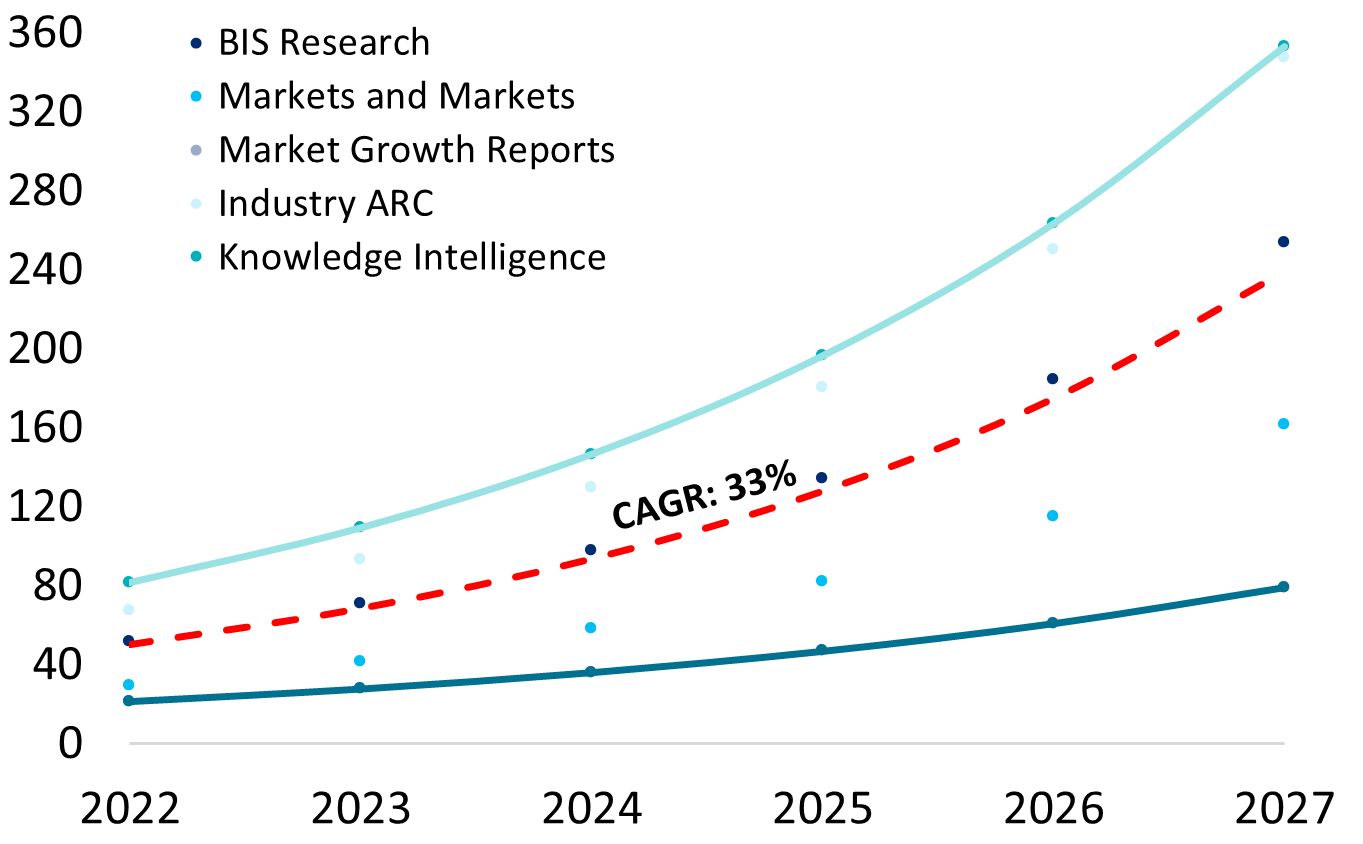

CGI also looked at 10 TAM (Total Addressable Market) estimates for AI, which project compound annual growth rates (CAGR) of between 20.1% and 39.7% (that’s somewhere between fast and very fast). This growth outlook is attracting significant capital from venture capital firms (VCs) and corporates.
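For readers less familiar with the metric, CAGR is the constant yearly rate that would take a value from its starting level to its ending level over a given number of years: CAGR = (end / start)^(1 / years) - 1. The short Python sketch below computes it and shows what the two ends of the quoted range would imply if a market compounded at those rates for eight years. The eight-year horizon, the starting index of 100 and the helper names are illustrative assumptions, not figures from the report.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding start_value at `rate` for `years` years."""
    return start_value * (1.0 + rate) ** years


# Illustrative only: an index starting at 100, compounded for an assumed
# 8 years at the low and high ends of the quoted CAGR range.
for rate in (0.201, 0.397):
    end = project(100.0, rate, 8)
    print(f"{rate:.1%} CAGR: 100 -> {end:.0f} after 8 years "
          f"(recovered rate {cagr(100.0, end, 8):.1%})")
```

Because growth compounds, the gap between "fast" and "very fast" widens quickly: over eight years the low end of the range multiplies a market roughly 4.3 times, while the high end multiplies it roughly 14.5 times.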

And the capital inflow is driving more innovation. This matters for companies, leaders and investors. The full report goes into greater depth on 10 areas of innovation that look particularly interesting.

Here’s a quick run-through of those 10. The full report also contains proprietary data on these areas:

Large Language Models

Language models are, in essence, models that predict which word or sequence of words comes next in a sentence. They use large neural networks to determine the context of words and produce probability distributions over which words come next.
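Real LLMs learn these probabilities with very large neural networks trained on huge text corpora. As a deliberately tiny stand-in, the Python sketch below swaps in a count-based bigram model, which looks only at the previous word, to show the core idea of turning context into a probability distribution over the next word. The toy corpus and the function names are illustrative assumptions; no neural network is involved.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word (toy stand-in for an LLM)."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_distribution(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Normalise follow-on counts into a probability distribution over the next word."""
    followers = counts.get(prev.lower(), Counter())
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()} if total else {}


corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
dist = next_word_distribution(model, "the")
print(sorted(dist.items(), key=lambda kv: -kv[1]))
```

Here "cat" receives probability 1/3 after "the" because it follows "the" in two of the six observed word pairs; an LLM does the same kind of prediction but conditions on far longer context.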

Large Language Models. Source: co:here, Introduction to Large Language Models, https://docs.cohere.ai/docs/introduction-to-large-language-models

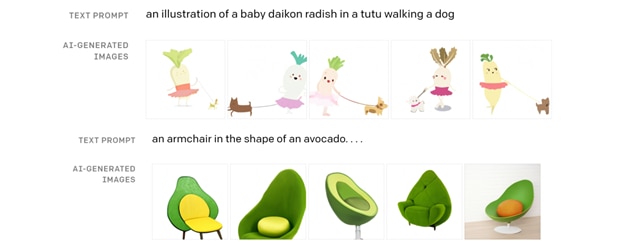

AI Art

This is exactly what it sounds like: images and artwork generated by AI from an initial text description.

Now, complicated artistic renderings of never-before-seen images can be generated based on a simple natural language prompt.

Examples of Images Generated from Captions by DALL·E (2021). Source: OpenAI, DALL·E: Creating Images from Text, https://openai.com/blog/dall-e/

Graph Neural Networks (finding patterns in relationships)

A graph neural network (GNN) is a class of artificial neural network: a deep learning method designed to learn from data described by graphs and then perform prediction tasks on it.

GNNs apply the great predictive power of deep learning to rich data structures expressed as graphs.
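The core operation in many GNNs is message passing: each node updates its representation by combining its own features with an aggregate of its neighbours' features. The stdlib-only Python sketch below implements one layer of a simple mean-aggregation scheme, h_v' = ReLU(W_self · h_v + W_neigh · mean of h_u over neighbours u). The toy graph, feature values and identity weight matrices are arbitrary assumptions; a real GNN would learn the weights, typically with a deep learning framework.

```python
Vector = list[float]
Matrix = list[list[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def message_passing_layer(
    features: dict[str, Vector],
    edges: list[tuple[str, str]],
    w_self: Matrix,
    w_neigh: Matrix,
) -> dict[str, Vector]:
    """One layer: combine each node's own features with the mean of its neighbours'."""
    neighbours: dict[str, list[str]] = {node: [] for node in features}
    for a, b in edges:  # undirected graph: messages flow both ways
        neighbours[a].append(b)
        neighbours[b].append(a)

    dim = len(next(iter(features.values())))
    updated = {}
    for node, h in features.items():
        nbrs = neighbours[node]
        if nbrs:
            agg = [sum(features[n][i] for n in nbrs) / len(nbrs) for i in range(dim)]
        else:
            agg = [0.0] * dim  # isolated node receives no messages
        combined = [s + m for s, m in zip(matvec(w_self, h), matvec(w_neigh, agg))]
        updated[node] = relu(combined)
    return updated


# Toy graph (assumed for illustration): A - B - C, plus an isolated node D.
features = {"A": [1.0, 0.0], "B": [0.0, 1.0], "C": [1.0, 1.0], "D": [0.5, 0.5]}
edges = [("A", "B"), ("B", "C")]
identity = [[1.0, 0.0], [0.0, 1.0]]
h1 = message_passing_layer(features, edges, w_self=identity, w_neigh=identity)
print(h1)
```

After one layer, node B's representation already mixes information from both A and C; stacking layers lets information travel further across the graph.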

Dataflows in Three Types of GNNs. Source: Bronstein et al. (2021)

Quantum for AI

The probabilistic nature of quantum computers makes them incredibly powerful when it comes to solving certain types of problems, specifically optimization, machine learning, simulation and cryptography.

The full report investigates how quantum computing could potentially enhance AI capabilities in two main subfields: natural language processing and artificial neural networks.
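The probabilistic nature described above comes from how quantum states work: a qubit is described by complex amplitudes, and measuring it yields each outcome with probability equal to the squared magnitude of its amplitude (the Born rule). The pure-Python sketch below simulates one qubit classically: it applies a Hadamard gate to |0⟩ to create an equal superposition and then samples measurements. It is an illustrative state-vector simulation under these textbook assumptions, not code for quantum hardware, and it says nothing about quantum speed-ups.

```python
import math
import random

# A single-qubit state: complex amplitudes (alpha, beta) for |0> and |1>.
State = tuple[complex, complex]
Gate = tuple[tuple[float, float], tuple[float, float]]

_S = 1 / math.sqrt(2)
HADAMARD: Gate = ((_S, _S), (_S, -_S))


def apply_gate(gate: Gate, state: State) -> State:
    """Multiply the 2x2 gate matrix by the state vector."""
    a, b = state
    return (gate[0][0] * a + gate[0][1] * b, gate[1][0] * a + gate[1][1] * b)


def probabilities(state: State) -> tuple[float, float]:
    """Born rule: the probability of each outcome is |amplitude|^2."""
    return (abs(state[0]) ** 2, abs(state[1]) ** 2)


def measure(state: State, rng: random.Random) -> int:
    """Sample a measurement outcome (0 or 1) according to the Born rule."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


zero: State = (1 + 0j, 0j)
plus = apply_gate(HADAMARD, zero)  # equal superposition of |0> and |1>
print(probabilities(plus))

rng = random.Random(42)
samples = [measure(plus, rng) for _ in range(10_000)]
print(sum(samples) / len(samples))  # fraction of 1s observed

back = apply_gate(HADAMARD, plus)  # a second Hadamard interferes back to |0>
print(probabilities(back))
```

Applying the gate a second time returns the qubit to |0⟩ with certainty: the amplitudes interfere, which is something a simple coin flip cannot do and part of what quantum algorithms exploit.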

Quantum Computing Total Addressable Market (in $bns)*. *Average of aggregated third-party forecasts. Source: Citi Global Insights, Various sources

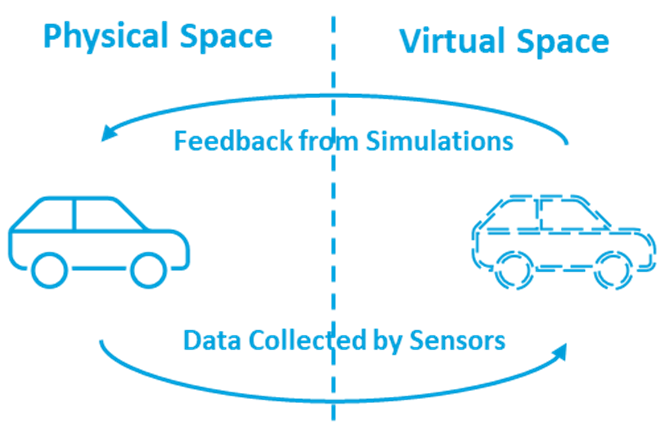

Digital Twins

One of the futuristic concepts realized by AI is the digital twin: a virtual representation of physical products (e.g., a car) and production processes that allows real-time data communication between the physical world and the virtual world.
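At its simplest, a digital twin is a software object that mirrors the live state of a physical asset and runs models against that state. The Python sketch below is a hypothetical twin of a car tyre: simulated sensor readings flow into the twin (physical to virtual), which keeps a history, extrapolates the next reading with a naive linear trend and produces advice that could flow back to the driver (virtual to physical). The class name, the tyre-pressure scenario, the 2.0 bar threshold and the linear forecast are all illustrative assumptions; real digital-twin platforms use far richer physics-based or ML models and streaming infrastructure.

```python
from dataclasses import dataclass, field


@dataclass
class TyreTwin:
    """Hypothetical digital twin mirroring one tyre's pressure readings (bar)."""

    min_pressure: float = 2.0  # illustrative alert threshold
    history: list[float] = field(default_factory=list)

    def sync(self, reading: float) -> None:
        """Physical -> virtual: ingest the latest sensor reading."""
        self.history.append(reading)

    @property
    def current(self) -> float:
        return self.history[-1]

    def forecast_next(self) -> float:
        """Naive linear extrapolation from the last two readings."""
        if len(self.history) < 2:
            return self.current
        return self.current + (self.history[-1] - self.history[-2])

    def advice(self) -> str:
        """Virtual -> physical: feedback that could be sent back to the driver."""
        if self.current < self.min_pressure:
            return "ALERT: pressure below minimum"
        if self.forecast_next() < self.min_pressure:
            return "WARNING: pressure predicted to drop below minimum"
        return "OK"


twin = TyreTwin()
for reading in [2.5, 2.4, 2.25, 2.05, 1.9]:  # simulated, slowly leaking tyre
    twin.sync(reading)
    print(f"{reading:.2f} bar -> {twin.advice()}")
```

The useful part is the warning at 2.05 bar: the twin flags the problem one reading before the physical tyre actually crosses the threshold.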

How Digital Twins Work. Source: Citi Global Insights

Digital twinning has been identified as one of the fastest-growing AI-enabled opportunities. Five independent TAM sources estimated the market at $8.6 billion in 2022.

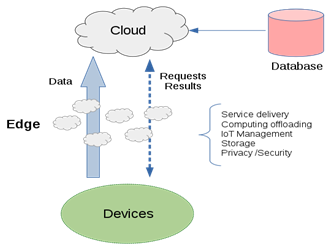

Edge AI

Edge computing is the idea of bringing the computing required by internet-connected devices nearer to where the data is generated: the edge of the network. More specifically, it can be described as part of a distributed computing topology in which information processing is located close to the edge, where things and people produce or consume that information.

Edge AI is simply the application of this idea to AI: AI devices process data locally, at the source or at the edge of the network, rather than relying on a distant central server.
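A practical consequence is that raw data need not leave the device; only the results do. The Python sketch below is a hypothetical edge node that scores every sensor reading locally with a lightweight anomaly detector (a running mean and variance maintained with Welford's algorithm, plus a z-score threshold) and forwards only anomalies upstream. The detector, the threshold of 3 standard deviations, the warm-up length and the `send_to_cloud` callback are illustrative assumptions standing in for whatever model and transport a real deployment would use.

```python
import math
from typing import Callable


class EdgeAnomalyDetector:
    """Runs on the device: keeps running statistics and flags outliers locally."""

    def __init__(self, z_threshold: float = 3.0, warmup: int = 10) -> None:
        self.z_threshold = z_threshold
        self.warmup = warmup
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations (Welford's algorithm)

    def is_anomaly(self, x: float) -> bool:
        anomalous = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            anomalous = std > 0 and abs(x - self.mean) / std > self.z_threshold
        if not anomalous:  # only learn from normal readings
            self.n += 1
            delta = x - self.mean
            self.mean += delta / self.n
            self.m2 += delta * (x - self.mean)
        return anomalous


def run_edge_node(readings: list[float], send_to_cloud: Callable[[dict], None]) -> int:
    """Process every reading on-device; transmit only anomalies. Returns messages sent.

    `send_to_cloud` is a hypothetical uplink callback supplied by the caller.
    """
    detector = EdgeAnomalyDetector()
    sent = 0
    for i, value in enumerate(readings):
        if detector.is_anomaly(value):
            send_to_cloud({"index": i, "value": value})
            sent += 1
    return sent


uplink: list[dict] = []  # stands in for the network link to the cloud
readings = [20.0 + 0.1 * (i % 5) for i in range(50)]  # steady temperature signal
readings[30] = 35.0  # one spike
sent = run_edge_node(readings, uplink.append)
print(f"{len(readings)} readings processed locally, {sent} sent upstream: {uplink}")
```

All 50 readings are analysed on the device, but only the single spike crosses the network, which is the bandwidth, latency and privacy argument for edge AI in miniature.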

How Edge Computing Works. Source: Wikimedia Commons, Edge computing paradigm, https://commons.wikimedia.org/wiki/File:Edge_computing_paradigm,_2019-07-03.svg

Swarm Intelligence

Swarm Intelligence (SI) is a subfield of AI inspired by the collective behaviour of swarms, such as ant colonies, bee swarms and bird flocks.

Since 2000, a plethora of SI algorithms have been proposed based on the swarm behaviours of various species, and the volume of research in the area has surged over the years. Government agencies are among the first movers in the SI space.
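Particle swarm optimisation (PSO), one of the best-known SI algorithms, is loosely modelled on bird flocking: each particle is a candidate solution that moves through the search space, pulled toward both its own best position so far and the best position found by the whole swarm. The Python sketch below uses PSO to minimise a simple test function. The swarm size, iteration count and coefficients (inertia `w`, cognitive weight `c1`, social weight `c2`) are common illustrative choices, not tuned values.

```python
import random
from typing import Callable


def sphere(position: list[float]) -> float:
    """Standard test function: global minimum of 0 at the origin."""
    return sum(x * x for x in position)


def pso(
    objective: Callable[[list[float]], float],
    dim: int,
    n_particles: int = 30,
    iterations: int = 200,
    w: float = 0.7,    # inertia: how much of the previous velocity is kept
    c1: float = 1.5,   # cognitive weight: pull toward the particle's own best
    c2: float = 1.5,   # social weight: pull toward the swarm's best
    bounds: tuple[float, float] = (-5.0, 5.0),
    seed: int = 0,
) -> tuple[list[float], float]:
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]
    best_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: best_val[i])
    swarm_pos, swarm_val = best_pos[g][:], best_val[g]

    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (
                    w * vel[i][d]
                    + c1 * r1 * (best_pos[i][d] - pos[i][d])
                    + c2 * r2 * (swarm_pos[d] - pos[i][d])
                )
                # Move, clamping the particle to the search bounds.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = objective(pos[i])
            if val < best_val[i]:
                best_pos[i], best_val[i] = pos[i][:], val
                if val < swarm_val:
                    swarm_pos, swarm_val = pos[i][:], val
    return swarm_pos, swarm_val


best, value = pso(sphere, dim=2)
print(best, value)
```

No particle knows the answer in advance; the good solution emerges from many simple agents sharing information, which is the defining idea of swarm intelligence.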

Swarm Intelligence Total Addressable Market (in $mns)*. *Average of aggregated third-party forecasts. Source: Citi Global Insights, Various sources

Estimates from five independent TAM sources for the global SI market averaged $50 million in 2022.

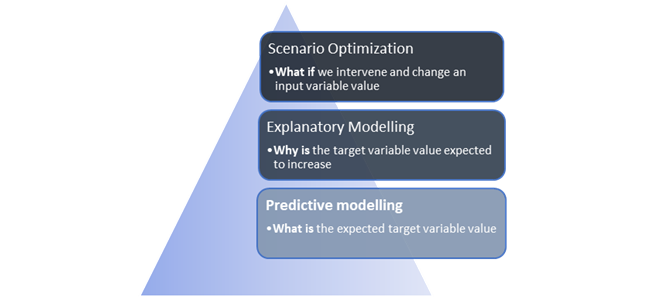

Causal AI

Despite AI’s ability to perform certain impressive tasks, there is a consensus among leading experts that present-day deep learning is less intelligent than a two-year-old child.

Research is now shifting toward general ML and deep learning architectures and training frameworks that address tasks such as reasoning, planning, capturing causality and achieving systematic generalization. Developing such architectures is a necessary step toward autonomous intelligent agents.

We believe we are at an inflection point where causal inference is moving from academic areas such as healthcare and social science research to the computer science community and its strong links to corporate production systems.
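A standard illustration of why causal reasoning matters is confounding: when a third variable Z drives both a treatment X and an outcome Y, the raw association between X and Y overstates the effect of X. The Python sketch below simulates such data with a known true effect of 2, then compares the naive difference in means with a backdoor adjustment that averages the within-stratum differences over the distribution of Z. The data-generating process and all of its coefficients are invented for illustration.

```python
import random


def simulate(n: int, seed: int = 0) -> list[tuple[int, int, float]]:
    """Hypothetical data: Z confounds X and Y; the true causal effect of X on Y is 2.0."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = 1 if rng.random() < 0.5 else 0
        x = 1 if rng.random() < (0.8 if z else 0.2) else 0  # Z makes treatment likelier
        y = 2.0 * x + 5.0 * z + rng.gauss(0.0, 1.0)         # Z also raises the outcome
        rows.append((z, x, y))
    return rows


def mean(values: list[float]) -> float:
    return sum(values) / len(values)


def naive_effect(rows) -> float:
    """Difference in mean outcome between treated and untreated, ignoring Z."""
    return mean([y for _, x, y in rows if x == 1]) - mean([y for _, x, y in rows if x == 0])


def backdoor_adjusted_effect(rows) -> float:
    """Sum over z of P(z) * (E[Y | X=1, z] - E[Y | X=0, z])."""
    total = 0.0
    for z_val in (0, 1):
        stratum = [(x, y) for z, x, y in rows if z == z_val]
        p_z = len(stratum) / len(rows)
        treated = mean([y for x, y in stratum if x == 1])
        control = mean([y for x, y in stratum if x == 0])
        total += p_z * (treated - control)
    return total


rows = simulate(100_000)
print(f"naive: {naive_effect(rows):.2f}, adjusted: {backdoor_adjusted_effect(rows):.2f}")
```

In this setup the naive estimate lands near 5, because treated units are disproportionately those with Z = 1, while the adjusted estimate recovers the true effect of about 2. A purely correlational model would learn the wrong answer from the same data.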

Hierarchy of causal inference: data science perspective. Source: Citi Global Data Insights

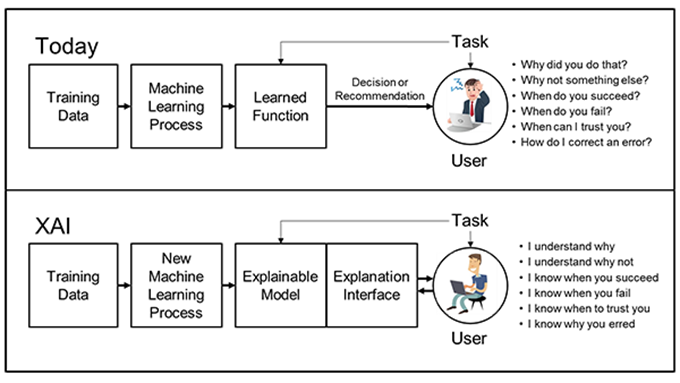

Explainable AI

Machine Learning (ML) models have demonstrated their ability to generate insightful predictions, especially in recent years. Given a decent predictive model, however, the next logical question is what drives its predictions.

This question has spurred rapid growth in the Explainable AI (XAI) literature. Which variables most influence the model’s predictions? How and why does the model generate a specific prediction? XAI helps us answer these questions, and with that knowledge we can improve model performance further.
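One widely used, model-agnostic way to ask which variables influence the predictions most is permutation feature importance: shuffle one input column while leaving the others intact, and measure how much the model's error rises. The Python sketch below applies it to a stand-in "black-box" model. The model, the synthetic data and the helper names are illustrative assumptions, and permutation importance is only one of several XAI techniques (SHAP and LIME are other common ones).

```python
import random


def black_box_model(row: list[float]) -> float:
    """Stand-in for an opaque model: depends strongly on x0, weakly on x1, not on x2."""
    return 3.0 * row[0] + 0.5 * row[1]


def mse(model, rows, targets) -> float:
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_repeats: int = 5, seed: int = 0):
    """Average increase in MSE when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = mse(model, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the target
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mse(model, shuffled, targets) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


rng = random.Random(1)
rows = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(500)]
targets = [black_box_model(r) + rng.gauss(0, 0.1) for r in rows]
scores = permutation_importance(black_box_model, rows, targets)
for name, score in zip(["x0", "x1", "x2"], scores):
    print(f"{name}: {score:.3f}")
```

The ranking that comes out (x0 far above x1, x2 at zero) matches how the stand-in model was built, which is exactly the kind of answer XAI aims to give for models whose internals we cannot read directly.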

Standard ML vs XAI. Source: DARPA

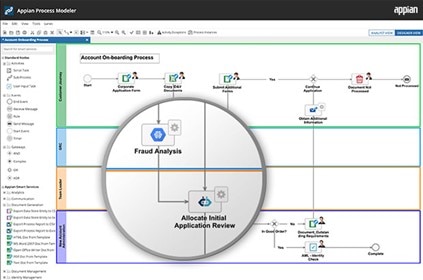

Low Code / No Code Development Platforms

Imagine a world where we don’t need to get lost in the weeds of programming languages but can instead build algorithms the way we build with Lego bricks.

How Low-Code Platform Appian Works. Source: Appian

Low/no-code development platforms work in an extremely intuitive way: users drag and drop pre-defined widgets and modules in a graphical user interface (GUI) to build process models, logic flows and applications.
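Conceptually, what a drag-and-drop canvas produces is a declarative description of a flow (which modules, in what order, with what settings) that a runtime engine then executes. The Python sketch below illustrates that idea with a hypothetical registry of pre-built modules and a flow defined purely as data, the kind of structure a GUI could save. The module names, the flow format and the engine are assumptions for illustration and do not reflect Appian's or any other vendor's actual internals.

```python
from typing import Any, Callable

# Hypothetical library of pre-built "widgets": each takes records plus settings.
MODULES: dict[str, Callable[..., list[dict[str, Any]]]] = {}


def module(name: str):
    """Register a function as a drag-and-drop building block."""
    def register(fn):
        MODULES[name] = fn
        return fn
    return register


@module("filter")
def filter_rows(rows, field: str, min_value: float):
    return [r for r in rows if r[field] >= min_value]


@module("add_field")
def add_field(rows, name: str, source: str, multiplier: float):
    return [{**r, name: r[source] * multiplier} for r in rows]


@module("sort")
def sort_rows(rows, field: str, descending: bool = False):
    return sorted(rows, key=lambda r: r[field], reverse=descending)


def run_flow(flow: list[dict[str, Any]], rows: list[dict[str, Any]]):
    """Execute a flow described as data, step by step, like a low-code runtime."""
    for step in flow:
        rows = MODULES[step["module"]](rows, **step.get("settings", {}))
    return rows


# What a user might assemble visually, saved as plain data (e.g. JSON).
flow = [
    {"module": "filter", "settings": {"field": "amount", "min_value": 100}},
    {"module": "add_field", "settings": {"name": "fee", "source": "amount", "multiplier": 0.02}},
    {"module": "sort", "settings": {"field": "amount", "descending": True}},
]
orders = [{"id": 1, "amount": 50}, {"id": 2, "amount": 250}, {"id": 3, "amount": 120}]
result = run_flow(flow, orders)
print(result)
```

The design choice is the separation: developers write and test the modules once, while business users change behaviour by editing the flow data, never the code.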

CGI examined 10 independent TAM estimates for the low/no-code development platform market; for 2022, they averaged $21 billion. Research provider Research and Markets expects the market to reach $191 billion by 2030.

In short, AI’s time is now. The full report is available to Velocity subscribers.

Citi Global Insights (CGI) is Citi’s premier non-independent thought leadership curation. It is not investment research; however, it may contain thematic content previously expressed in an Independent Research report.