It’s clear that data centers will be a key part of the infrastructure needed to support increased deployment of artificial intelligence. But what will the impact be on data center capex and resource consumption? Such questions are fundamental to understanding the potential shape of the AI revolution.

Data centers (excluding crypto) accounted for just 0.9-1.3% of global electricity usage in 2021, a share that has been broadly stable in recent years.

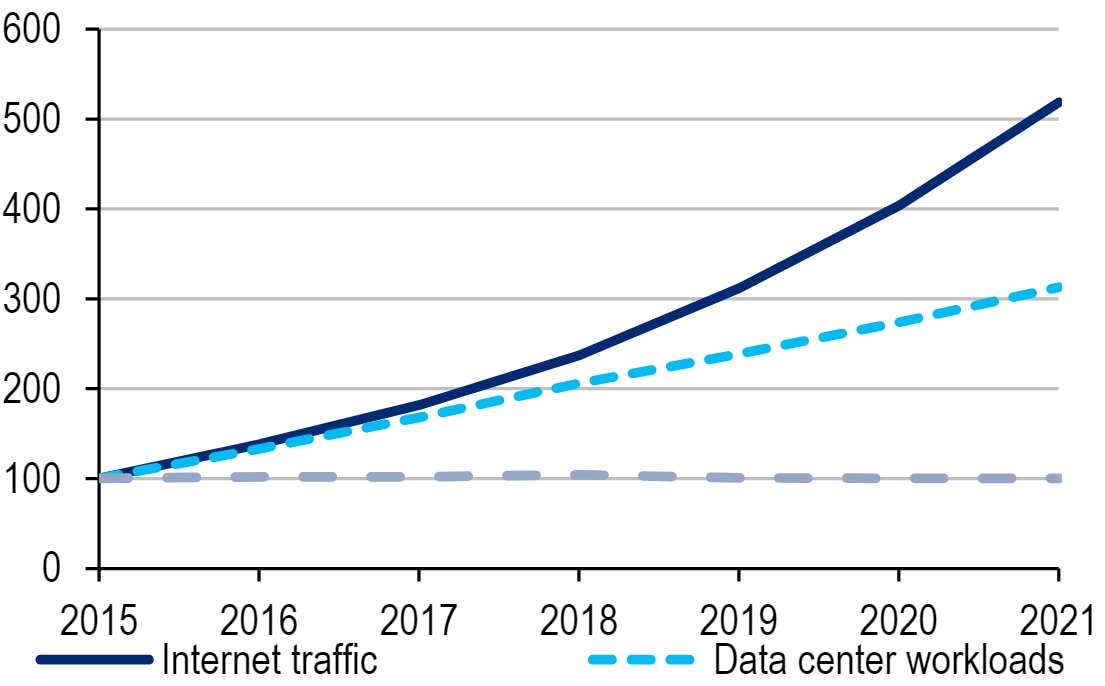

Historical predictions that data center energy use would rise exponentially with data consumption have so far not been borne out, even as Internet traffic has grown exponentially, as the chart below shows.

Internet traffic is growing exponentially

© 2023 Citigroup Inc. No redistribution without Citigroup’s written permission.

Source: Citi Research, Company Reports

This is due in part to the shift to hyperscale, improved server efficiency, the use of virtual servers, and a focus on performance per watt. Taken together, these factors have kept data center energy usage largely in check.

Data center workloads are more than 10x higher than in 2010 and 3x higher than in 2015, yet power consumption has increased only slightly. That implies a near 25% annual improvement in energy efficiency.
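As a rough check on that efficiency figure, the short Python sketch below converts the workload multiples into compound annual growth rates. It assumes that power consumption was approximately flat, so that workload growth per year approximates the efficiency gain, and that the end year is 2021 (the year of the electricity figure quoted earlier). Both are assumptions made for illustration, not figures stated in the report, and the function name is hypothetical.

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Compound annual growth rate implied by a total multiple over `years`."""
    return multiple ** (1.0 / years) - 1.0


# Assumption: the comparison runs to 2021; the article does not state the end year.
END_YEAR = 2021

# Workload multiples quoted in the text: 10x since 2010, 3x since 2015.
since_2010 = implied_annual_growth(10.0, END_YEAR - 2010)
since_2015 = implied_annual_growth(3.0, END_YEAR - 2015)

print(f"Implied annual growth since 2010: {since_2010:.1%}")
print(f"Implied annual growth since 2015: {since_2015:.1%}")
```

Under these assumptions the implied rates come out in the low-to-mid 20% range per year, which is broadly in line with the near 25% figure.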

According to an MIT report (11 Nov-19, see here), “OpenAI found that the amount of computational power used to train the largest AI models had doubled every 3.4 months since 2012.” That is far faster than the roughly two-year doubling period usually associated with Moore’s Law, which means AI compute has outpaced Moore’s Law over the past decade.
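To put the two doubling periods on the same footing, the sketch below converts each into an annual growth factor. The 3.4-month figure comes from the quote above. The 24-month figure for Moore’s Law is an assumption based on its common formulation, and the function name is illustrative only.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Growth multiple over 12 months for a quantity that doubles every `doubling_months`."""
    return 2.0 ** (12.0 / doubling_months)


AI_DOUBLING_MONTHS = 3.4      # from the OpenAI finding quoted in the text
MOORE_DOUBLING_MONTHS = 24.0  # assumption: Moore's Law taken as a two-year doubling

ai_factor = annual_growth_factor(AI_DOUBLING_MONTHS)
moore_factor = annual_growth_factor(MOORE_DOUBLING_MONTHS)

print(f"AI training compute: about {ai_factor:.1f}x per year")
print(f"Moore's Law pace:    about {moore_factor:.2f}x per year")
```

On these assumptions, training compute for the largest models grew by more than an order of magnitude per year, against well under 2x per year at a Moore’s Law pace.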

According to Citi’s technology research team, data management, processing, and cloud investment is the second-largest area for investment related to AI.

Cooling Off

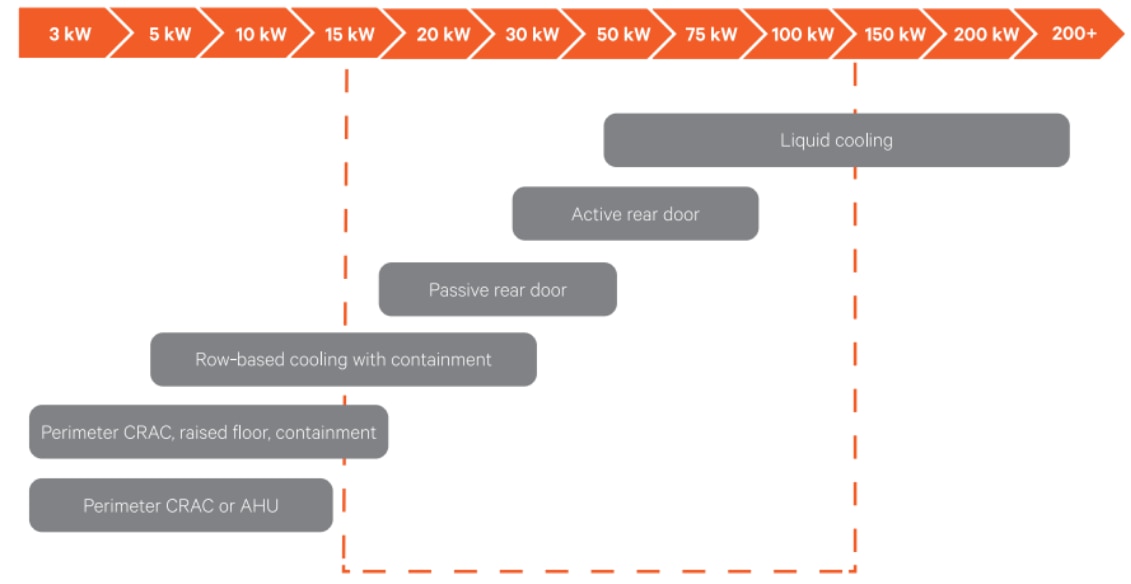

The constraining factors of GPU performance are power draw and heat dissipation. Heat density becomes a bigger issue as components are more tightly packed to limit latency.

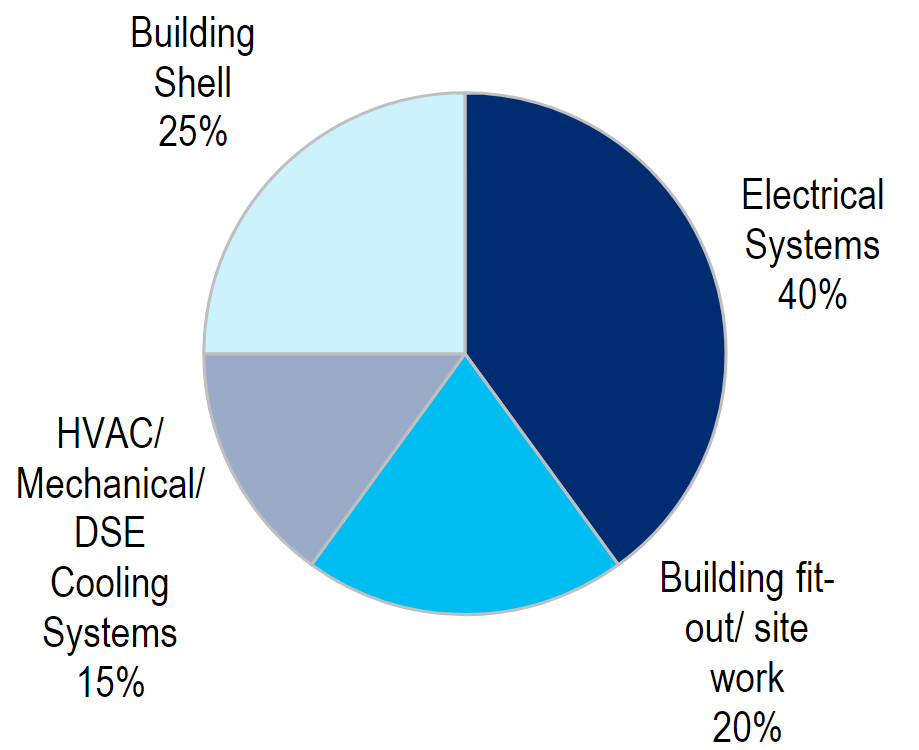

Electrical and cooling is a major part of data center cost

Source: Digital Realty, Citi Research

Cooling is already seen as a key means to make data centers more efficient. AI arguably makes this even more important, the report says. Well over 99% of energy entering a server exits as heat. As server density increases, cooling becomes even more challenging.

Air-based cooling systems lose their effectiveness when rack densities exceed 20 kW, at which point liquid cooling becomes the more viable approach.

Source: Citi Research, Vertiv

Renewables

Renewable power use is seen as the joint top priority in data center sustainability, along with cooling. According to New Scientist (28 Feb-23, see here), less than 25% of AIs use low-carbon energy sources. However, the Citi Research report notes that, according to the IEA, Amazon, Microsoft, Meta, and Google are the four largest corporate buyers of renewable power purchase agreements (PPAs).

The Citi Research report says increased scrutiny of the carbon emissions of AI, and of data centers more broadly, will support higher levels of corporate renewable PPAs, even if efficiency gains keep actual power demand growth in check.

For more information on this subject, please see the report, dated 14 July 2023, here: Global Electrical Equipment - AI Unleashed: Data centers, power consumption, and the impact of AI

Citi Global Insights (CGI) is Citi’s premier non-independent thought leadership curation. It is not investment research; however, it may contain thematic content previously expressed in an Independent Research report. For the full CGI disclosure, click here.